A new way of understanding and regulating privacy may be necessary to protect individuals’ sensitive information, as journalists and other data practitioners increasingly turn to data from online social networks to study and explain social phenomena.

Currently, most online social networks (OSNs) let their users customize their privacy settings, allowing them to hide or reveal information such as their gender and location. As we explain in this article, the inherent characteristics of OSNs, combined with the practice of predictive analytics, make it possible to bypass individuals’ privacy choices. This means that the way individuals’ privacy is protected, and technically guaranteed, requires fundamental changes.

We believe that solving this problem is becoming increasingly important. Companies, academic researchers and journalists all consider the data generated by OSNs extremely valuable, because it provides information about human behavior and the functioning of society.

What are online social networks?

As researchers Boyd and Ellison explained in 2007, OSNs have three fundamental characteristics that distinguish them from other online data repositories (e.g. forums and other types of websites):

- User profiles are public or semi-public

- They contain a network structure that connects people to each other

- The connections are visible to both the connected users and the network as a whole

Crucially, it is the public visibility of connections that allows OSNs to compromise individual privacy, as we will explain below.

Understanding privacy

Before we delve deeper into OSNs, it is important to understand what privacy is and what expectations are associated with it. A common definition of the term was given by Westin in 1970, who describes it as “the ability for people to determine for themselves when, how, and to what extent information about them is communicated to others”.

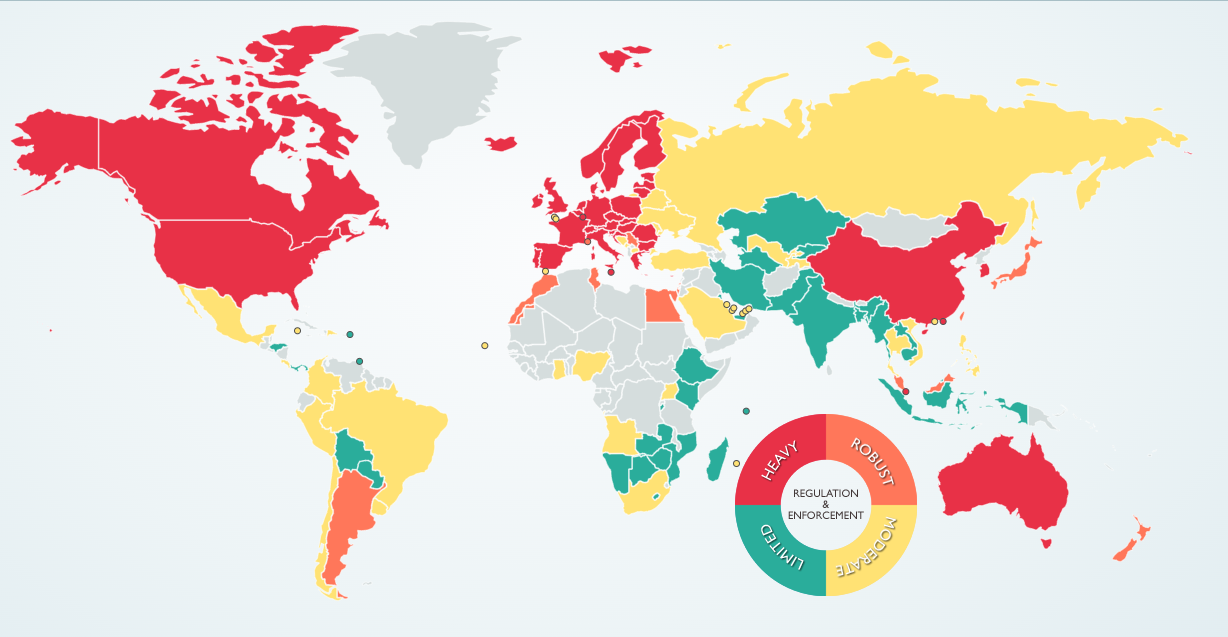

In many countries, privacy is understood as a fundamental right of citizens. Privacy International reports that around 130 countries worldwide grant their citizens the right to privacy. Beyond individual countries, international and cross-border unions and political entities also recognize privacy as a human right (e.g. Article 12 of the UN’s Universal Declaration of Human Rights (UDHR), 1948).

The extent of privacy regulation and enforcement around the world. Source: DLAPiperDataProtection

In many countries, the right to privacy either explicitly extends to, or indirectly encompasses, the right to personal data protection. This essentially means that personal data must be handled with care, and that exposing individuals’ personal data without their explicit consent is unlawful.

Why are OSNs a risk to individual privacy?

Consider the example of a person using a credit card service: when a purchase takes place, personal information such as the purchased service or product, the time and the location is collected. This process takes place in isolation from the actions of other customers using credit card services. OSNs, by contrast, are infused with the social interaction of individuals connected in the virtual space. With respect to privacy, it is the connection of individuals in the network that acts as a source of personal exposure.

OSNs contain large amounts of data and have an increasing number of users. These two features have made them a popular source of data, particularly in light of the emerging practice of predictive analytics. Predictive analytics describes the extraction and mining of data in search of patterns and information, using statistical and mathematical techniques such as community detection, dimensionality reduction and social network analysis (Mishra and Silakari, 2012). There are three key elements of OSNs that make them the ideal playground for predictive analytics:

- social network features

- user-generated textual content

- location-based information

It is in these three areas that risks to individual personal data safety originate.

Social network features

A widely studied phenomenon in social science is that of homophily, also described as “birds of a feather flock together”. Homophily describes a pattern in which individuals in a social network who are connected by ties are on average more similar to one another than to those with whom they share no ties (McPherson et al., 2001). Homophily is found in the digital world just as much as in the real world. In OSNs, this means that individuals are significantly more similar to their connections than to their non-connections (Gayo-Avello, 2011). This finding has been at the heart of predictive analytics, and it carries enormous consequences for individual privacy.

For example, Mislove et al. (2010) mined OSN data and used the information about a person’s peers to predict the characteristics of that individual. Following this study, more research emerged presenting statistical models able to draw inferences from social ties about the personal attributes of an individual, all with high levels of certainty in prediction (e.g. Gayo-Avello, 2011; Sarigol et al., 2014).
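To make the idea of inference from peers concrete, here is a minimal sketch in Python. It is not the model used in any of the studies cited above: it simply takes a majority vote over the attributes that a person’s connections have made public. The function name `infer_attribute`, the toy friendship graph and the city labels are all hypothetical and chosen only for illustration.

```python
from collections import Counter

def infer_attribute(graph, known, user):
    """Guess a hidden attribute of `user` from the public attributes of their connections.

    Returns the most common value among connections with a public attribute,
    together with the share of those connections that hold it.
    """
    votes = Counter(known[n] for n in graph.get(user, ()) if n in known)
    if not votes:
        return None, 0.0
    label, count = votes.most_common(1)[0]
    return label, count / sum(votes.values())

# Hypothetical toy data: alice hides her city, her friends do not.
graph = {
    "alice": ["bob", "carol", "dave"],
    "bob": ["alice", "carol"],
    "carol": ["alice", "bob"],
    "dave": ["alice"],
}
known = {"bob": "Boston", "carol": "Boston", "dave": "Chicago"}

guess, share = infer_attribute(graph, known, "alice")
print(guess, round(share, 2))  # Boston 0.67
```

Even this naive vote recovers a plausible value for a user who disclosed nothing, which is the core of the privacy problem: the prediction depends only on what the user’s connections chose to share.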

But there is more to it. In another study, Zheleva and Getoor (2009) showed that personal attributes can also be inferred from group-level structures in OSNs. Using group-level classification algorithms, their statistical model is capable of discovering group structures within friendship networks and identifying the groups an individual belongs to. This means that the publicly observable similarity of group members makes it possible to infer the attributes of individuals in the group who have chosen to hide their personal information.
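A rough sketch of group-based inference could look like the following. It is a simplification under our own assumptions, not the classification model of the study above: it pools the public attributes of everyone who shares at least one group with the target user and returns the most common value. Note that no friendship link is needed. The names `infer_from_groups`, `memberships` and `public_attr`, and the age brackets, are hypothetical.

```python
from collections import Counter

def infer_from_groups(memberships, public_attr, user):
    """Guess a hidden attribute from the public attributes of fellow group members."""
    votes = Counter()
    for members in memberships.values():
        if user not in members:
            continue  # only groups the target user belongs to are informative
        votes.update(public_attr[m] for m in members if m != user and m in public_attr)
    return votes.most_common(1)[0][0] if votes else None

# Hypothetical toy data: "hidden_user" discloses no age bracket.
memberships = {
    "chess_club": {"u1", "u2", "u3", "hidden_user"},
    "hiking_group": {"u4", "hidden_user"},
    "book_club": {"u5", "u6"},
}
public_attr = {
    "u1": "25-34", "u2": "25-34", "u3": "35-44",
    "u4": "25-34", "u5": "45-54", "u6": "45-54",
}

print(infer_from_groups(memberships, public_attr, "hidden_user"))  # 25-34
```

The more homogeneous a group is, the more confident such a guess becomes, which is why group membership alone can undo an individual’s choice to hide an attribute.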

And yet another approach exists. This time, it is the diversity in social ties, rather than homophily, that drives the prediction. A study by Backstrom and Kleinberg (2014) shows that diversity in social ties can be used to infer a person’s romantic partner. Compared to the methods above, the authors develop a more nuanced understanding of ties by measuring tie strength and further tie-related characteristics, and use those to predict yet another tie-related feature. But what if you did not want to disclose your relationship to the OSN? This study shows that your preference does not matter.

User-generated textual content

Language is another tool for OSN-based inference. Research in sociolinguistics shows that the way we speak, from the vocabulary we use and the topics we bring up to our use of punctuation, tends to correlate with individual-level attributes (Labov, 1972; Coates, 1996; Macaulay, 2005). Language serves to communicate with others, and it also operates as a tool for displaying status and for proving belonging to and identification with particular groups. We are also likely to express shared identities, whether of ethnicity, religion, gender or social class, through common expressions or slang. This means that language is predictable, and can therefore be used to predict personal characteristics. The text that users publicly upload to OSNs creates powerful datasets for developing classification algorithms and models that place individuals in groups according to their linguistic choices and style. A study by Rao et al. (2010) used a large Twitter dataset and machine learning to train a classification algorithm and successfully infer user characteristics such as gender, age, political orientation, and religion.
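The principle behind text-based inference can be illustrated with a very small classifier. The sketch below is a multinomial Naive Bayes model with add-one smoothing written in plain Python. It is a stand-in of our own choosing, not the model used by Rao et al. (2010), and the four training messages and the labels `group_a` and `group_b` are invented for illustration.

```python
import math
from collections import Counter, defaultdict

def train(samples):
    """Count words per label from (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in samples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability for `text`."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total)  # class prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical training messages from users whose group is public.
samples = [
    ("omg lol so excited!!!", "group_a"),
    ("lol cant wait haha", "group_a"),
    ("the quarterly report is attached", "group_b"),
    ("please review the report", "group_b"),
]
model = train(samples)

print(predict(model, "haha lol"))           # group_a
print(predict(model, "review the report"))  # group_b
```

Real systems use far richer features and much larger datasets, but the logic is the same: word choice that correlates with a group in the public data is used to place users who never stated their group.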

Location-based information

The last dimension of OSNs used for inference is geographic information. From the home address to a person’s precise location at the present moment, unveiling such private details means accessing information that can be used to learn about a person’s whereabouts or to deliver more effective advertising through personalized content and well-timed delivery. The sensitivity of personal geographic information is recognized by companies too, as reflected in Foursquare’s homepage slogan: “With uncompromising accuracy, accessibility, scale, and respect for consumer privacy, Foursquare is the location platform the world trusts” (Foursquare, 2020). A study by Pontes et al. (2012) used data from Foursquare, Google+ and Twitter to infer an individual’s home location from the publicly available geographic information uploaded by users, as well as from the home locations disclosed by the users’ friends. The developed model had an accuracy of 74%, and for a smaller subset of users the home residence was inferred within a six-kilometer radius with an accuracy of 60% for Foursquare and Twitter, and just around 10% for Google+.
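As an illustration of how a home location might be estimated and then checked against a six-kilometer radius, consider the sketch below. It is not the model of Pontes et al. (2012): we assume a much simpler estimator, the coordinate-wise median of a user’s public check-ins, and measure the error with the haversine great-circle distance. All coordinates, and the names `estimate_home` and `haversine_km`, are hypothetical.

```python
import math
from statistics import median

EARTH_RADIUS_KM = 6371.0

def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def estimate_home(points):
    """Estimate a home location as the coordinate-wise median of public check-ins."""
    return (median(p[0] for p in points), median(p[1] for p in points))

# Hypothetical public check-ins; the last one is a trip far from home.
checkins = [
    (40.71, -74.00),
    (40.72, -74.01),
    (40.73, -73.99),
    (40.65, -73.95),
    (41.88, -87.63),
]
true_home = (40.715, -74.005)  # assumed ground truth, used only to measure the error

estimate = estimate_home(checkins)
error_km = haversine_km(estimate, true_home)
print(estimate, error_km < 6.0)
```

The median is used here because it is robust to occasional trips. A handful of public check-ins is already enough to place this hypothetical user well within the six-kilometer radius.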

Further research has reversed the mechanism and used available geographic information to infer individuals’ social relations. Using Flickr data, Crandall et al. (2010) built an inference model of social ties based on geographic co-occurrences. Their research answers the question: when two people appear in nearby locations a given number of times, what is the likelihood that they know each other? The research shows that it takes very few co-occurrences to infer the underlying social network structure of individuals.
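The counting step behind this kind of inference can be sketched as follows. This is a simplified illustration under our own assumptions, not the probabilistic model of Crandall et al. (2010): space is divided into fixed grid cells and time into fixed windows, and two users co-occur when they appear in the same cell during the same window. The cell size, window length, records and function name are hypothetical.

```python
import math
from collections import defaultdict
from itertools import combinations

def co_occurrences(records, cell_deg=0.01, window_s=86400):
    """Count, for each pair of users, the (cell, time window) buckets they share.

    `records` holds (user, lat, lon, unix_timestamp) tuples. Fixed buckets are a
    simplification: users close together but on opposite sides of a cell or
    window boundary are not counted.
    """
    buckets = defaultdict(set)
    for user, lat, lon, ts in records:
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg), ts // window_s)
        buckets[key].add(user)
    counts = defaultdict(int)
    for users in buckets.values():
        for pair in combinations(sorted(users), 2):
            counts[pair] += 1
    return dict(counts)

# Hypothetical geotagged photo records.
records = [
    ("ann", 48.8584, 2.2945, 1000),
    ("ben", 48.8586, 2.2947, 5000),
    ("ann", 51.5007, -0.1246, 200000),
    ("ben", 51.5009, -0.1244, 210000),
    ("cat", 48.8584, 2.2945, 400000),
]

counts = co_occurrences(records)
likely_ties = [pair for pair, n in counts.items() if n >= 2]
print(counts)       # {('ann', 'ben'): 2}
print(likely_ties)  # [('ann', 'ben')]
```

In this toy example, two users who show up in the same place on the same day in two different cities are flagged as a likely tie, while a third user who visited the same spot days later is not.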

Why does this matter?

The task of inferring individuals’ private characteristics raises ethical concerns. In particular, research that uses tools to bypass users’ privacy choices and unmask their characteristics for the purpose of “advertising, personalization, and recommendation” (Rao et al., 2010:44) requires careful consideration. In their paper, Rao et al. (2010) justify the study by the interest in inferring attributes that users have chosen to keep private. But how can stripping individuals of an identity they have deliberately left undisclosed be justified, particularly when the aim is to deliver targeted advertising to them? The lack of ethical consideration by Rao et al. (2010), particularly regarding the risk of compromising individuals’ privacy, is concerning. Their research provides an example of predictive analytics in which inference is carried out without (i) an ethical framework setting boundaries for the study and (ii) a clear indication of how subjects are protected and defended in their right to privacy.

One issue with predictive analytics is that the lines around informed consent can become blurry. Informed consent has been a pillar of the moral framework guiding research ever since the Nuremberg Code of 1947. In Big Data ethics, a central challenge is defining informed consent. What types of practices are subjects informed about? Is publicly accessible information ethically acceptable to use for research? Does the same apply when it is used to create new information about an individual and their connections? In informed consent, transparency is crucial, and the subjects of an inferential study should always know that their data is being used, and can be used, in that way.

Another chilling factor is that OSN companies themselves do not disclose what they do to generate advertising revenue or improve their platforms, although predictive analytics seems to play a role. In recent years, the controversial practice of building shadow profiles has put OSNs at the center of an ethical debate. Shadow profiles describe the use of predictive models by OSNs to collect personal information that a user does not disclose. These profiles are maintained privately by OSN providers, outside of any user agreement, permission, or terms of service. Shadow profiles were first reported in 2013 in connection with Facebook (Blue, 2013), which had collected phone numbers from the mobile phone books of its users. Facebook was thereby able to infer the phone numbers of individuals who had not disclosed them directly but whose numbers were stored in their peers’ phone books.
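The phone book example can be reduced to a few lines of Python. The sketch below is purely illustrative and says nothing about how any real platform is implemented: it only shows that merging the address books uploaded by some users yields contact details for people who never provided them. The function name, user names and phone numbers are all fictional.

```python
from collections import defaultdict

def build_shadow_profiles(uploaded_books, disclosed):
    """Collect phone numbers of people who did not disclose them themselves.

    `uploaded_books` maps an uploading user to their address book (name -> phone).
    `disclosed` is the set of people who shared their own number with the platform.
    """
    shadow = defaultdict(set)
    for book in uploaded_books.values():
        for name, phone in book.items():
            if name not in disclosed:
                shadow[name].add(phone)
    return dict(shadow)

# Fictional data: dana never shared a number, but two contacts uploaded it.
uploaded_books = {
    "user_1": {"dana": "555-0100", "eli": "555-0101"},
    "user_2": {"dana": "555-0100"},
}
disclosed = {"eli"}

print(build_shadow_profiles(uploaded_books, disclosed))  # {'dana': {'555-0100'}}
```

The person affected has no setting to change here, because the information was never theirs to withhold: it was disclosed by their peers.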

There are yet other implicit risks. Insights such as the language-based classification of individuals by gender, political group and religion can be used inappropriately by information holders. An example is the controversial study carried out by AI researchers Wang and Kosinski (2018), which used the profile pictures of members of an online dating community to predict sexual orientation. The findings were remarkable: the authors were able to predict with 91% accuracy whether a male member defined himself as heterosexual or gay, while for women the figure was 83%. The implications of such a study are substantial: classifying individuals by their biological and facial traits can lead to discrimination by actors who oppose homosexuality, whether an employer or a government.

What can we do about this?

Once you understand that predictive analytics can pose serious threats to privacy, especially in the hands of revenue-oriented companies or naïve practitioners, it may feel like the options to safeguard individual privacy are limited.

One solution would be to educate individuals about OSNs and inference (Zheleva and Getoor, 2009), enabling them to make better choices in their privacy settings. For example, knowing that a group’s homogeneity makes the prediction of personal attributes highly accurate, individuals could reflect on the characteristics of their groups and, if concerned about their privacy, try to diversify them. But this is complicated to achieve: groups evolve over time, as individuals continuously change and add information in their personal profiles, altering what can be predicted about any given individual in the network. Moreover, education in privacy and inference cannot be a one-off session, as new models and tools are constantly being developed. Another problem is that the insights we are aware of are limited to those that are publicly available, which limits the solution.

As researchers came to understand that shaping personal privacy in social networks is not entirely up to the individual, they began to discuss whether the concept of individual privacy is appropriate in the context of digital platforms. The debate centered on the fact that the decision to disclose personal information is no longer governed by individuals alone, but shifts towards a collective level. This shift has significant implications for privacy and policy (Sarigol et al., 2014).

Privacy as we know it in OSNs follows a model known as access control, in which users achieve privacy by individually deciding what to disclose and to whom. Researchers looking at Westin’s definition of privacy stress that the functions of privacy do not take place in isolation. Instead, the relational features of privacy are crucial (Cohen, 2012), as privacy is not a binary state but is contextual within networks. The definition of privacy as we know it is increasingly outdated.

A new privacy paradigm known as networked privacy (see Garcia, 2017) takes this into account. The researchers behind it (Marwick and Boyd, 2014) highlight the importance of individual agency in governing one’s own privacy, but stress that social network structures undermine this agency. Networked privacy thus focuses on giving individuals the ability to control the information that arises from the social network structure and flows within it. In practice, this is described as equipping individuals with the knowledge and authority for “shaping the context in which information is being interpreted” (Marwick and Boyd, 2014:1063).

Currently, it seems that making individuals aware and in control of their network may be the only way to ensure their privacy wishes are fulfilled. As OSNs and the appeal of their data are here to stay, a shift towards networked privacy seems like a necessary solution to defend individuals’ right to privacy while maintaining the functionality of OSNs.

The works cited in this article are listed below:

- Acquisti, Alessandro. ”Privacy in electronic commerce and the economics of immediate gratification.” In Proceedings of the 5th ACM conference on Electronic commerce, pp. 21-29. 2004.

- Agrawal, Divyakant, Ceren Budak, Amr El Abbadi, Theodore Georgiou, and Xifeng Yan. ”Big data in online social networks: user interaction analysis to model user behavior in social networks.” In International Workshop on Databases in Networked Information Systems, pp. 1-16. Springer, Cham, 2014.

- Backstrom, Lars, and Jon Kleinberg. ”Romantic partnerships and the dispersion of social ties: a network analysis of relationship status on facebook.” In Proceedings of the 17th ACM conference on Computer supported cooperative work & social computing, pp. 831-841. 2014.

- Bannerman, Sara. ”Relational privacy and the networked governance of the self.” Information, Communication & Society 22, no. 14 (2019): 2187-2202.

- Bagrow, James P., Xipei Liu, and Lewis Mitchell. ”Information flow reveals prediction limits in online social activity.” Nature Human Behaviour 3, no. 2 (2019): 122-128.

- Blue, Violet. ”Anger mounts after Facebook’s shadow profiles leak in bug.” 2013. https://www.zdnet.com/article/anger-mounts-after-facebooks-shadow-profiles-leak-in-bug/

- Boyd, Danah, and Kate Crawford. ”Critical questions for big data: Provocations for a cultural, technological, and scholarly phenomenon.” Information, communication & society 15, no. 5 (2012): 662-679.

- Boyd, Danah M., and Nicole B. Ellison. ”Social network sites: Definition, history, and scholarship.” Journal of computer-mediated communication 13, no. 1 (2007): 210-230.

- Coates, Jennifer. ”Women talk: Conversation between women friends.” (1996): 265-268.

- Cohen, Julie E. Configuring the networked self: Law, code, and the play of everyday practice. Yale University Press, 2012.

- Crandall, David J., Lars Backstrom, Dan Cosley, Siddharth Suri, Daniel Huttenlocher, and Jon Kleinberg. ”Inferring social ties from geographic coincidences.” Proceedings of the National Academy of Sciences 107, no. 52 (2010): 22436-22441.

- Debatin, Bernhard, Jennette P. Lovejoy, Ann-Kathrin Horn, and Brittany N. Hughes. ”Facebook and online privacy: Attitudes, behaviors, and unintended consequences.” Journal of computer-mediated communication 15, no. 1 (2009): 83-108.

- DiMaggio, Paul. ”Culture and cognition.” Annual review of sociology 23.1 (1997): 263-287.

- Duhigg, Chris. ”How Companies Learn Your Secrets”. New York Times. (2012). https://www.nytimes.com/2012/02/19/magazine/shopping-habits.html

- Erdős, Dóra, Rainer Gemulla, and Evimaria Terzi. ”Reconstructing graphs from neighborhood data.” ACM Transactions on Knowledge Discovery from Data (TKDD) 8.4 (2014): 1-22.

- Garcia, David. ”Leaking privacy and shadow profiles in online social networks.” Science advances 3, no. 8 (2017): e1701172.

- Gayo-Avello, Daniel. ”All liaisons are dangerous when all your friends are known to us.” In Proceedings of the 22nd ACM conference on Hypertext and hypermedia, pp. 171-180. 2011.

- Horvát, Emöke-Ágnes, Michael Hanselmann, Fred A. Hamprecht, and Katharina A. Zweig. ”One plus one makes three (for social networks).” PloS one 7, no. 4 (2012): e34740.

- Kim, Myunghwan, and Jure Leskovec. ”The network completion problem: Inferring missing nodes and edges in networks.” In Proceedings of the 2011 SIAM International Conference on Data Mining, pp. 47-58. Society for Industrial and Applied Mathematics, 2011.

- Kokolakis, Spyros. ”Privacy attitudes and privacy behaviour: A review of current research on the privacy paradox phenomenon.” Computers and security 64 (2017): 122-134.

- Labov, William. Language in the inner city: Studies in the Black English vernacular. No. 3. University of Pennsylvania Press, 1972.

- Laney, Doug. ”3D data management: Controlling data volume, velocity and variety.” META group research note 6, no. 70 (2001): 1.

- Macaulay, R. K. Talk that counts: Age, Gender, and Social Class Differences in Discourse. Oxford University Press, 2005.

- Marwick, Alice E., and Danah Boyd. ”Networked privacy: How teenagers negotiate context in social media.” New media & society 16.7 (2014): 1051-1067.

- Mercken, Liesbeth, Christian Steglich, Philip Sinclair, Jo Holliday, and Laurence Moore. ”A longitudinal social network analysis of peer influence, peer selection, and smoking behavior among adolescents in British schools.” Health Psychology 31, no. 4 (2012): 450.

- Mishra, Nishchol, and Sanjay Silakari. ”Predictive analytics: A survey, trends, applications, oppurtunities & challenges.” International Journal of Computer Science and Information Technologies 3, no. 3 (2012): 4434-4438.

- Mislove, Alan, Bimal Viswanath, Krishna P. Gummadi, and Peter Druschel. ”You are who you know: inferring user profiles in online social networks.” In Proceedings of the third ACM international conference on Web search and data mining, pp. 251-260. 2010.

- Mondal, Mainack, Peter Druschel, Krishna P. Gummadi, and Alan Mislove. ”Beyond access control: Managing online privacy via exposure.” In Proceedings of the Workshop on Useable Security, pp. 1-6. 2014.

- Pangburn, DJ. ”Even This Data Guru Is Creeped Out By What Anonymous Location Data Reveals About Us.” (2017). https://www.fastcompany.com/3068846/how-your-location-data-identifies-you-gilad-lotan-privacy

- McPherson, Miller, Lynn Smith-Lovin, and James M. Cook. ”Birds of a feather: Homophily in social networks.” Annual review of sociology 27.1 (2001): 415-444.

- Pontes, Tatiana, Gabriel Magno, Marisa Vasconcelos, Aditi Gupta, Jussara Almeida, Ponnurangam Kumaraguru, and Virgilio Almeida. ”Beware of what you share: Inferring home location in social networks.” In 2012 IEEE 12th International Conference on Data Mining Workshops, pp. 571-578. IEEE, 2012.

- Rao, Delip, David Yarowsky, Abhishek Shreevats, and Manaswi Gupta. ”Classifying latent user attributes in twitter.” In Proceedings of the 2nd international workshop on Search and mining user-generated contents, pp. 37-44. 2010.

- Richterich, Annika. The big data agenda: Data ethics and critical data studies. University of Westminster Press, 2018.

- Sarigol, Emre, David Garcia, and Frank Schweitzer. ”Online privacy as a collective phenomenon.” In Proceedings of the second ACM conference on Online social networks, pp. 95-106. 2014.

- Wang, Yilun, and Michal Kosinski. ”Deep neural networks are more accurate than humans at detecting sexual orientation from facial images.” Journal of personality and social psychology 114, no. 2 (2018): 246.

- Zheleva, Elena, and Lise Getoor. ”To join or not to join: the illusion of privacy in social networks with mixed public and private user profiles.” In Proceedings of the 18th international conference on World wide web, pp. 531-540. 2009.